Google raised the bar for artificial intelligence innovation at I/O 2025, its annual developer conference. The company revealed a suite of tools and technologies that signal a seismic shift in how we build, interact with, and deploy AI systems. From AI agents navigating the web to tools that design UIs and write code, here are the 11 most impactful announcements you need to know.

1. Gemini 2.5: Leading the AI Pack

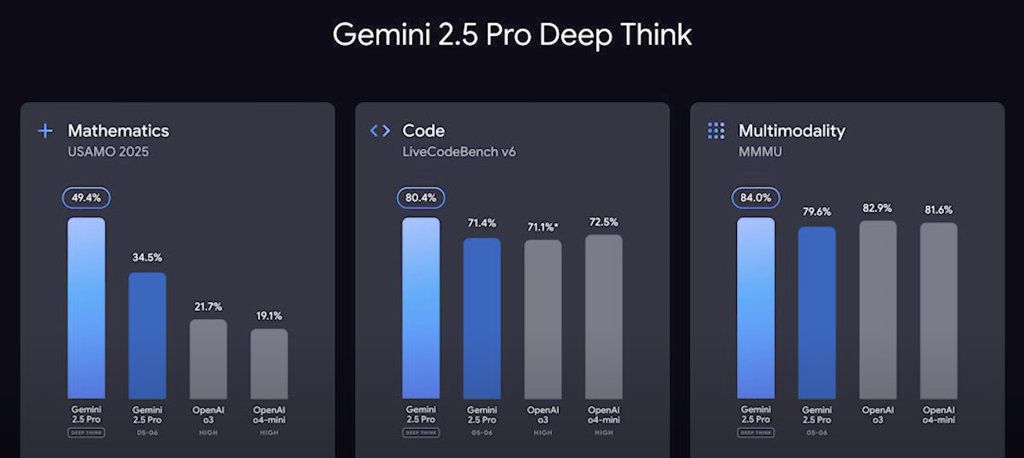

The spotlight was firmly on Gemini 2.5, Google’s most powerful AI model to date. In the benchmark results Google presented, it outperformed top-tier competitors on nearly every test, positioning it as a new standard for AI reasoning and task handling. Even the more affordable Gemini 2.5 Flash variant delivered impressive results, rivaling many of today’s high-end models.

2. Browsers That Use the Web for You

In a bold move, Google introduced Gemini’s integration with Chrome, enabling it to interact with websites autonomously. This includes navigating pages, submitting forms, and even generating and posting responses online, marking a major step toward intelligent web automation.

3. AI-Powered UI Creation with Stitch

UI design could soon be largely automated thanks to Stitch, a new AI tool that can generate user interfaces with minimal human input. This innovation could reshape front-end development, allowing for faster prototyping and design iteration.

4. Meet Jules: Your Autonomous Coding Assistant

Jules is Google’s agentic coding assistant, built to automate much of the work in software development. It can write and manage code, even assembling entire AI workflows, which significantly reduces the manual effort required from engineers and speeds up project timelines.

5. Flow: Cinematic Storytelling at Scale

Flow is a new creative platform built to generate video content with audio using AI. Targeted at filmmakers and content creators, Flow can produce entire cinematic sequences, potentially transforming how digital stories are crafted and shared.

6. Google’s Premium AI Access Plan

To access Gemini’s full capabilities, Google introduced the AI Ultra Plan. While it comes with a premium price tag, users gain access to advanced features, higher context limits, and broader functionality not available in the free or basic versions. It’s aimed at professionals who need the most from their AI tools.

7. Open Gemma 3n Models for Developers

Google’s Gemma 3n series gives developers and businesses open access to capable models. With performance approaching leading models like Claude 3.7 Sonnet, these open models are designed for integration into real-world applications and commercial products.

8. Project Astra: Real-Time Visual Understanding

Project Astra demonstrates real-time video analysis using AI. With a camera feed, Astra can interpret environments, identify objects, and offer useful contextual insights. Its future integration with smart glasses could enable on-the-go augmented AI assistance.

9. Google Beam: 2D to 3D Video Transformation

Google Beam (formerly Project Starline) uses AI to convert flat 2D video into immersive 3D content. This tech could dramatically enhance video calls, virtual experiences, and creative content, making media more dynamic and lifelike.

10. Enhanced Generative Tools: Veo and Imagen

Google’s generative video and image tools, Veo and Imagen, received significant upgrades with Veo 3 and Imagen 4. Most notably, Veo 3 can generate synchronised audio alongside video, enabling more coherent and expressive content and bridging the gap between visual and auditory storytelling.

11. Native Carousel Support in CSS

For web developers, a subtle yet impactful change: Chrome now supports native CSS primitives for carousels, such as the `::scroll-button()` and `::scroll-marker` pseudo-elements. This allows developers to build sliders and galleries with CSS alone, reducing the need for complex JavaScript libraries.

What This Means for the Future

Google I/O 2025 marks a pivotal moment in AI evolution. The theme is clear: automation and agency. We’re seeing tools that not only generate code or content but also take action, navigating websites, building applications, and creating immersive media with minimal human input.

The combination of open models, browser-based agents, and creative AI tools signals a future where developers, designers, and creators collaborate with intelligent systems, not just use them.